In every corner of our economy, Artificial Intelligence is rewriting the rules, revolutionizing industries, and redefining possibilities. But as AI systems become more powerful, a crucial question looms: can they be trusted?

Trustworthy AI isn’t just a technical challenge—it’s a societal imperative. Over my years in technology strategy, I’ve seen the transformational potential of AI firsthand. But I’ve also seen how a lack of trust can undermine adoption and stifle innovation.

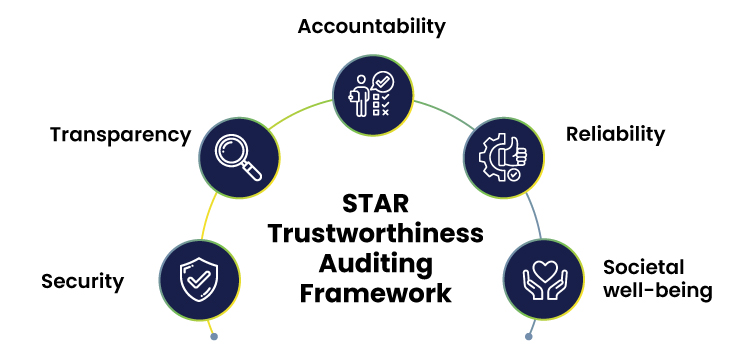

At Charter Global, we believe that trustworthy AI must be built on a strong foundation. That’s why we developed the STARS framework—focusing on Security, Transparency, Accountability, Reliability, and Societal well-being.

AI isn’t just a tool; it’s a decision-maker. Whether recommending medical treatments, approving loans, or optimizing supply chains, AI systems wield immense power. But this power comes with risks.

These risks aren’t just theoretical—they’re real. Addressing them head-on is the only way to leverage AI’s full potential.

Data is the lifeblood of AI. But without robust security measures, it’s also a vulnerability. Trustworthy AI systems must safeguard user data, ensuring it is protected against unauthorized access or misuse.

For example, encryption and advanced authentication protocols are vital to protecting sensitive information, such as medical records or financial details.

Imagine using an AI system without knowing how it works. Frustrating, right? Transparency ensures that users and stakeholders can understand AI’s decision-making processes.

Explainable AI offers clarity, enabling users to trace decisions and identify potential errors. This is especially critical in industries like healthcare, where AI recommendations can impact lives.

AI doesn’t operate in a vacuum. If it makes a mistake, who’s responsible? Trustworthy AI ensures clear accountability.

This involves creating governance structures, audit trails, and mechanisms to address harm. When organizations take responsibility for their AI systems, they build confidence among users.
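To make the idea of an audit trail concrete, here is a minimal sketch of a tamper-evident decision log. It is an illustration under stated assumptions, not a description of any particular product: the function names `append_entry` and `verify`, and the fields in the sample records, are hypothetical. Each entry stores a hash of its own content plus the previous entry's hash, so editing an earlier record breaks the chain.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, record):
    # Serialize with sorted keys so the same record always hashes the same way.
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, record):
    """Append a decision record, chaining it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "record": record,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, record),
    })
    return log


def verify(log):
    """Return True only if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical usage: two model decisions recorded for later review.
audit_log = []
append_entry(audit_log, {"model": "loan-scorer-v1", "decision": "approved", "case": 101})
append_entry(audit_log, {"model": "loan-scorer-v1", "decision": "declined", "case": 102})
print(verify(audit_log))
```

A log like this does not settle who is accountable, but it gives reviewers a record they can check for after-the-fact edits.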

AI must perform consistently—even in unpredictable environments. Whether it’s an autonomous vehicle navigating a busy city or a fraud detection system analyzing millions of transactions, reliability is non-negotiable.

Trustworthy AI systems are rigorously tested to ensure they can handle unexpected inputs and remain resilient to errors or attacks.

AI must work for everyone—not just a select few. That means designing systems that prioritize fairness and equity.

By using diverse, representative data sets and implementing checks for bias, we can create AI systems that deliver equitable outcomes. Beyond fairness, trustworthy AI should actively contribute to societal progress, from improving healthcare access to addressing climate change.
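One simple form a bias check can take is comparing approval rates across groups. The sketch below is an assumption-laden illustration rather than a complete fairness audit: the function names, the group labels, and the sample data are all hypothetical, and the ratio it computes is only one of several fairness measures in use.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the approval rate per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True or False.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so rates are equal
    return min(rates.values()) / highest


# Hypothetical sample: group A approved 8 of 10 times, group B 5 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = selection_rates(sample)
print(rates, disparate_impact_ratio(rates))
```

A commonly cited rule of thumb treats a ratio below 0.8 as a signal to investigate further. A low ratio is a prompt for review, not proof of unfair treatment.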

Building trustworthy AI isn’t just about principles; it’s about action. Organizations have to put the STARS framework into practice.

Take fraud detection in the financial industry. Traditional systems often flag transactions based on rigid rules, leading to false positives. But AI systems, trained on massive data sets, can identify fraud patterns in real time.

Applying the STARS framework is what makes these systems trustworthy.

The result? Faster, more accurate fraud detection that customers trust.

AI holds incredible promise—but only if people trust it. By focusing on Security, Transparency, Accountability, Reliability, and Societal well-being, we can ensure that AI systems are not just innovative but also ethical and effective.

At Charter Global, we’re committed to building AI solutions that empower organizations while respecting the people they serve. Let’s work together to create AI that drives progress responsibly.
